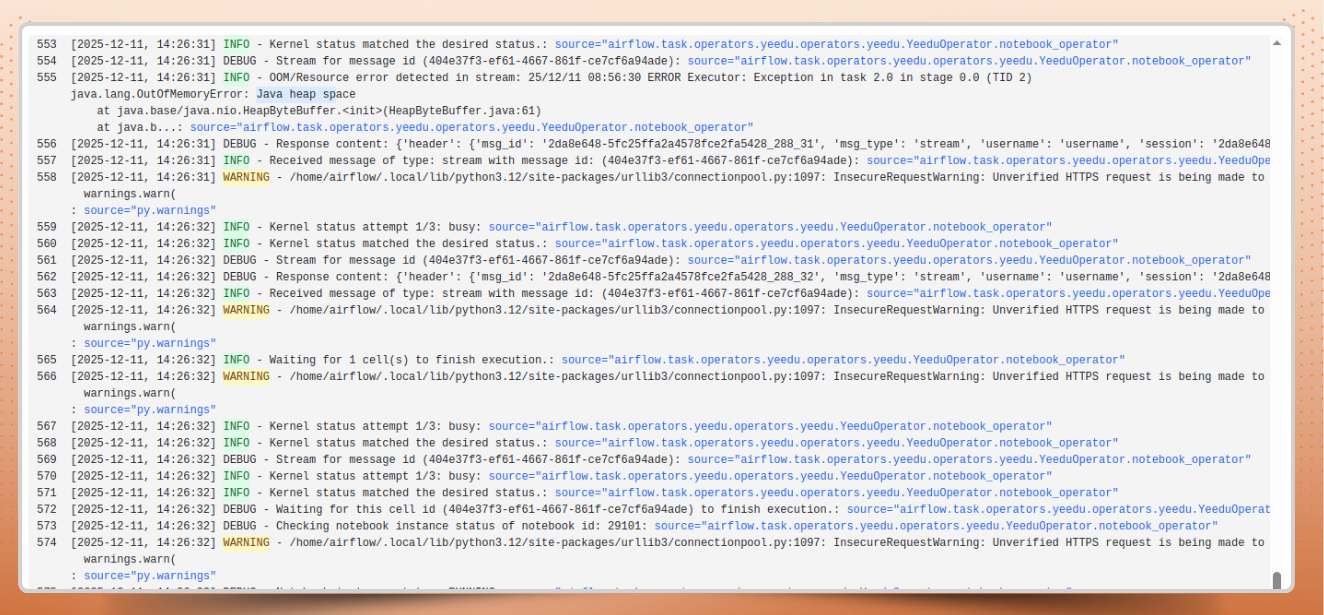

When running Apache Spark at scale, performance tuning can feel like a guessing game especially without effective Spark cluster autoscaling in place. You launch your job on what seems like a reasonable cluster, say, 4 cores and 16 GB of memory and everything looks good for a while. Then, halfway through execution, you’re hit with an OutOfMemory error or any other resource exhaustion error. Your clean, efficient workflow turns into a manual debugging session.

You double the memory, resubmit the job, and hope it works this time. Maybe it does. Maybe it doesn’t. And so, the cycle continues a frustrating, time-consuming loop for data teams who just want predictable performance and better overall spark cluster optimization.

Yeedu changes that.

Every Spark workload is unique. The right cluster configuration for one job may be totally insufficient for another. Datasets vary in size, transformations differ in complexity, and shuffles can spike memory usage unexpectedly.

Most engineers deal with this reactively:

Without intelligent spark cluster autoscaling, this cycle repeats itself.

While that might work in development, it’s a major bottleneck in production.

In automated data environments, especially those orchestrated by Airflow, a single failed Yeedu job running through airflow can bring an entire DAG to a halt. Downstream dependencies wait indefinitely; dashboards don’t update, and SLAs slip. Meanwhile, engineers scramble to diagnose the issue, resize clusters, and restart jobs often wondering how to debug long running Spark jobs or why a spark job is running forever despite seemingly adequate resources.

The result? Wasted time, wasted compute, and eroded confidence in your data pipelines. Scaling Spark efficiently shouldn’t be this hard.

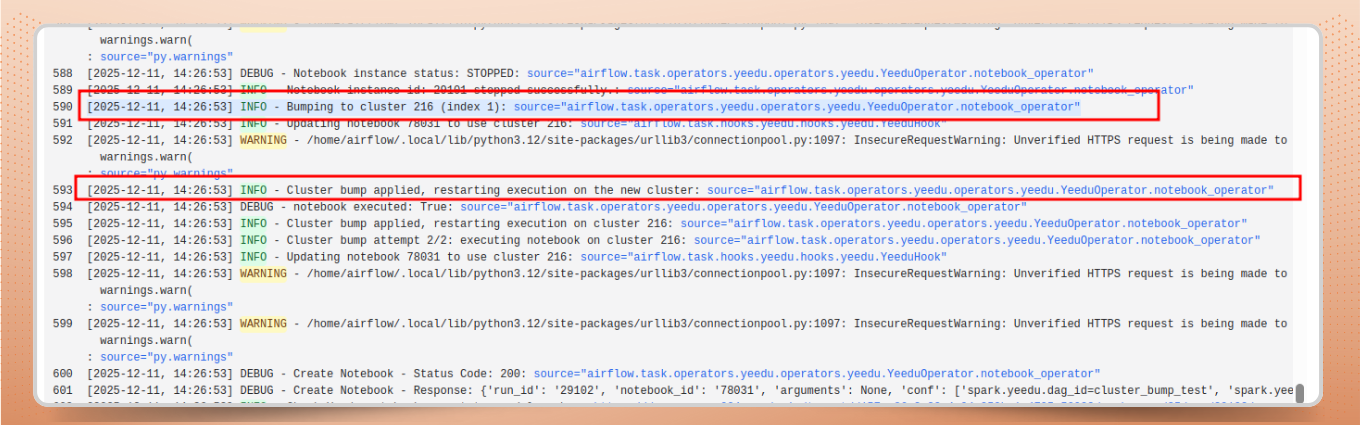

Yeedu introduces a new approach to Spark cluster autoscaling: automated detection and resubmission for Spark jobs that fail due to resource exhaustion.

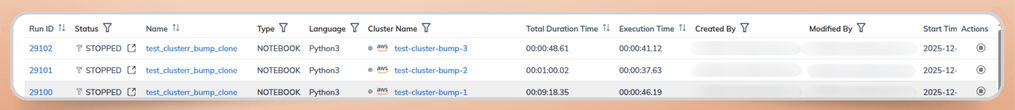

Instead of manual troubleshooting, Yeedu continuously monitors jobs and if it detects any job fails with resource limitations, Yeedu automatically triggers a new submission of the same job, this time on the next-level cluster configuration from provided list of already configured clusters and will remain on that once the job succeeds. This directly supports smarter, data-driven Spark performance optimization without human intervention.

For example, scales up from 4 cores / 16 GB (xsmall) → 8 cores / 32 GB (small) -> 16 cores / 64 GB (Medium). If job succeeds on 16 cores/64 GB configuration, for the upcoming runs, job will run on 16 cores / 64 GB itself a practical form of Spark performance optimization.

No human involvement. No Airflow DAG edits. No delay in pipeline recovery.

This self-healing mechanism eliminates the most painful part of debugging Spark jobs that transient resource limitations don’t derail your production workflows. Instead, Yeedu seamlessly retries them with the right amount of compute, removing much of the manual effort involved in debugging Spark jobs.

The result: you get automatic, data-driven scaling decisions, without guesswork or repeated manual tuning.

The best part? You don’t have to modify your existing DAGs.

Yeedu’s auto-resubmission logic is built into the Yeedu Airflow Operator, which wraps Spark jobs and notebooks executed through Airflow. Every task launched this way inherits the retry-on-resource-exhaustion behavior automatically.

From Airflow’s perspective, everything looks the same dependencies, success/failure states, and logs are preserved. Yeedu handles the underlying retries transparently, ensuring that only successful completions are reported back to Airflow.

This means your DAGs remain clean, your operators remain simple, and your pipelines stay resilient. Even better, it eliminates one of the most frustrating causes of production delays: airflow tasks failing in the middle of complex, multi-step workflows. Your Airflow orchestration continues as designed, while Yeedu ensures each airflow task has exactly the resources it needs to complete without engineers having to pause and ask how to debug long running Spark jobs mid-incident.

Building and maintaining reliable data pipelines is hard enough without firefighting infrastructure issues. Yeedu’s automated retry system addresses one of the most persistent pain points in Spark operations, i.e., unpredictable resource failures.

Here’s why it’s a game-changer for data engineering teams:

This is what modern, intelligent spark cluster optimization looks like.

Learn more about the Yeedu Airflow Operator and its reliability features at docs.yeedu.io.