Vendor lock-in happens when your data, tools, or workloads become dependent on one cloud or analytics platform, making migration expensive and complex. You can avoid vendor lock-in by using open data architectures, open file formats, cloud-agnostic pipelines, and interoperable tools that keep your data portable and your choices flexible.

Recent discussions on the evolution of the modern data stack highlight one enduring outcome: modular data architectures. Organizations can now choose best-of-breed solutions for each layer of their stack, improving flexibility and performance.

However, this freedom carries an often-overlooked risk. Growing dependence on vendor-specific formats, SDKs, and tools can limit future choices. Proprietary solutions make it easy to get started, but adopting them is a long-term commitment that can lead to costly and complex migrations later, especially as the risk of vendor lock-in in cloud computing becomes more evident.

Data teams often postpone migration plans, only to find themselves constrained by rising vendor costs and limited alternatives when they finally decide to act. For many, understanding how to avoid vendor lock-in becomes essential for long-term architectural flexibility.

Before we get into strategies to avoid vendor lock-in, it’s useful to look at some examples of what vendor lock-in looks like in real environments.

Snowflake stores its native tables in FDN, a proprietary file format. Although Snowflake also supports Apache Iceberg tables, teams that keep their data in FDN face challenges when moving to other platforms.

Teradata workloads are typically written with Teradata-specific SQL extensions and BTEQ scripts, which often require extensive code refactoring and testing during migration.

Databricks simplifies development with magic commands and the dbutils API, but running the same workloads elsewhere can require significant changes if the target environment doesn’t support these features. Similarly, relying on the built-in Hive Metastore instead of an external catalog can complicate migration. This is why Databricks migration becomes a major concern for teams planning for long-term portability.
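Before estimating migration effort, it helps to know how often these features actually appear. The following Python sketch counts magic commands and `dbutils` calls in notebook source. It assumes notebooks exported in Databricks source format, where magic lines are prefixed with `# MAGIC`; the regular expressions and the sample notebook are illustrative, not an exhaustive list of platform-specific features.

```python
import re
from collections import Counter

# Magic commands such as %sql or %run. In notebooks exported as Databricks
# source files, magic lines are prefixed with "# MAGIC ", so both forms match.
MAGIC_RE = re.compile(r"^[ \t]*(?:#[ \t]*MAGIC[ \t]+)?%(\w+)", re.MULTILINE)

# dbutils calls such as dbutils.fs.ls(...) or dbutils.secrets.get(...);
# the captured group is the dbutils module (fs, secrets, widgets, ...).
DBUTILS_RE = re.compile(r"\bdbutils\.(\w+)")


def scan_notebook_source(source: str) -> Counter:
    """Count Databricks-specific constructs in a notebook's source text."""
    findings: Counter = Counter()
    for magic in MAGIC_RE.findall(source):
        findings[f"magic:%{magic}"] += 1
    for module in DBUTILS_RE.findall(source):
        findings[f"dbutils.{module}"] += 1
    return findings


# Hypothetical notebook exported in Databricks source format.
sample = """# Databricks notebook source
df = spark.read.table("sales")
# COMMAND ----------
# MAGIC %sql
# MAGIC SELECT * FROM sales
# COMMAND ----------
files = dbutils.fs.ls("/mnt/raw")
token = dbutils.secrets.get(scope="prod", key="api-token")
# COMMAND ----------
# MAGIC %run ./shared/helpers
"""

for construct, count in sorted(scan_notebook_source(sample).items()):
    print(f"{construct}: {count}")
```

A count like this is only a rough inventory of what would need an equivalent on the target platform; it does not detect, for example, tables that live in the built-in Hive Metastore.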

These examples show how seemingly minor choices can lock organizations into a specific ecosystem. The resulting tradeoffs may not be irreversible, but they often come at a high cost in time and risk.

The most effective way to avoid vendor lock-in is to adopt open standards and maintain a modular architecture. Together, these principles let every part of your stack evolve independently without disrupting the rest. This is the foundation of a truly open data architecture.

Adopt open table formats like Apache Iceberg or Delta Lake. They offer ACID transactions, schema evolution, and time travel while remaining vendor-neutral.

Use standard object storage services such as Amazon S3, Azure Blob Storage, or Google Cloud Storage, and store data in open file formats such as Apache Parquet rather than proprietary ones.
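Keeping pipeline code storage-agnostic often comes down to passing URIs around and resolving the provider in one place. The sketch below uses only the Python standard library; the bucket, container, and account names are hypothetical. In practice, libraries such as fsspec take a similar scheme-based approach, with real storage clients behind each scheme.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# URI scheme -> cloud provider. Pipeline code passes URIs around and never
# hard-codes a provider; this table is the only place that knows about clouds.
SCHEME_TO_PROVIDER = {
    "s3": "aws",       # Amazon S3
    "s3a": "aws",      # Hadoop S3A connector, common in Spark jobs
    "abfss": "azure",  # Azure Data Lake Storage Gen2 via the ABFS driver
    "gs": "gcp",       # Google Cloud Storage
}


@dataclass(frozen=True)
class StorageLocation:
    provider: str
    container: str  # bucket name, or container@account for Azure
    path: str       # object key prefix without the leading slash


def resolve(uri: str) -> StorageLocation:
    """Split a storage URI into provider, bucket/container, and path."""
    parsed = urlparse(uri)
    provider = SCHEME_TO_PROVIDER.get(parsed.scheme)
    if provider is None:
        raise ValueError(f"unsupported storage scheme: {parsed.scheme!r}")
    return StorageLocation(provider, parsed.netloc, parsed.path.lstrip("/"))


# Hypothetical locations for the same dataset on three clouds.
for uri in (
    "s3://analytics-lake/sales/2024/",
    "abfss://lake@analyticsacct.dfs.core.windows.net/sales/2024/",
    "gs://analytics-lake/sales/2024/",
):
    print(resolve(uri))
```

Moving a dataset to another cloud then means changing a URI in configuration, not rewriting the code that reads it.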

Choose catalogs that are portable and accessible across environments, such as Apache Polaris, Unity Catalog, AWS Glue Data Catalog, or Apache Hive Metastore.
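With Apache Iceberg, switching catalogs can be largely a configuration change. This sketch builds the Spark properties that register an Iceberg catalog backed either by a REST catalog service (Apache Polaris implements the Iceberg REST catalog API) or by a Hive Metastore. The endpoints and the catalog name `lake` are hypothetical. Applying the properties to Spark would also require the Iceberg Spark runtime JAR, and for a REST catalog the meaning of `warehouse` is defined by the catalog service.

```python
ICEBERG_EXTENSIONS = "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions"
ICEBERG_CATALOG_CLASS = "org.apache.iceberg.spark.SparkCatalog"


def iceberg_catalog_conf(name: str, catalog_type: str, uri: str, warehouse: str) -> dict[str, str]:
    """Return Spark properties that register an Iceberg catalog called `name`.

    catalog_type is "rest" (an Iceberg REST catalog such as Apache Polaris)
    or "hive" (a Hive Metastore reached over Thrift).
    """
    if catalog_type not in ("rest", "hive"):
        raise ValueError(f"unsupported catalog type: {catalog_type!r}")
    prefix = f"spark.sql.catalog.{name}"
    return {
        "spark.sql.extensions": ICEBERG_EXTENSIONS,
        prefix: ICEBERG_CATALOG_CLASS,
        f"{prefix}.type": catalog_type,
        f"{prefix}.uri": uri,
        f"{prefix}.warehouse": warehouse,
    }


# Hypothetical endpoints: the same logical catalog "lake" backed by two services.
rest_conf = iceberg_catalog_conf(
    "lake", "rest", "https://catalog.example.com/api/catalog", "analytics")
hive_conf = iceberg_catalog_conf(
    "lake", "hive", "thrift://metastore.example.com:9083", "s3://analytics-lake/warehouse")

# Only these values differ; queries keep referring to tables as lake.<namespace>.<table>.
changed = sorted(key for key in rest_conf if rest_conf[key] != hive_conf[key])
print(changed)

# With PySpark and the Iceberg runtime JAR available, the properties could be applied as:
#   builder = SparkSession.builder
#   for key, value in rest_conf.items():
#       builder = builder.config(key, value)
#   spark = builder.getOrCreate()
```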

Rely on open-source engines such as Apache Spark, Trino, or Apache Flink that can run across multiple platforms. These engines are key components of an open-source data stack.

Separate the layers of your data platform: storage, compute, metadata, transformation, and orchestration. A well-decoupled design lets you replace or upgrade any component without disrupting the rest of the system.
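A common way to achieve this decoupling in code is to make pipeline logic depend on a narrow interface instead of a vendor SDK. In this sketch, the `SecretStore` protocol, the environment-variable naming convention, and the connection string are all hypothetical. Only `dbutils.secrets.get(scope, key)` is a real Databricks API, and it is wrapped so that the pipeline never calls it directly.

```python
import os
from typing import Mapping, Protocol


class SecretStore(Protocol):
    """The only secrets API that pipeline code is allowed to call."""

    def get(self, scope: str, key: str) -> str: ...


class EnvSecretStore:
    """Reads secrets from environment variables named SCOPE_KEY (hypothetical convention)."""

    def __init__(self, environ: Mapping[str, str] = os.environ) -> None:
        self._environ = environ

    def get(self, scope: str, key: str) -> str:
        name = f"{scope}_{key}".upper().replace("-", "_")
        if name not in self._environ:
            raise KeyError(f"secret {scope}/{key} not found (expected variable {name})")
        return self._environ[name]


class DatabricksSecretStore:
    """Delegates to dbutils.secrets; only works inside a Databricks runtime."""

    def __init__(self, dbutils) -> None:
        self._dbutils = dbutils

    def get(self, scope: str, key: str) -> str:
        return self._dbutils.secrets.get(scope=scope, key=key)


def warehouse_dsn(secrets: SecretStore) -> str:
    """Pipeline logic: depends on the SecretStore interface, not on a vendor SDK."""
    user = secrets.get("warehouse", "user")
    password = secrets.get("warehouse", "password")
    return f"postgresql://{user}:{password}@db.example.com/analytics"


# Outside Databricks: inject an environment-backed store (values are placeholders).
store = EnvSecretStore({"WAREHOUSE_USER": "etl", "WAREHOUSE_PASSWORD": "change-me"})
print(warehouse_dsn(store))
# Inside Databricks, the same function would receive DatabricksSecretStore(dbutils).
```

Swapping platforms then means writing one new adapter class rather than touching every job that reads a secret.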

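In practice, a vendor-neutral architecture combines open table formats on standard object storage, a portable catalog, open-source compute engines, and independently managed transformation and orchestration layers.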

This approach provides long-term flexibility, helping you keep control over your data and infrastructure decisions while minimizing vendor lock-in in the cloud.

Reducing dependence on a single cloud provider gives you the flexibility to match workloads with the best platform for performance or cost. A multi-cloud strategy allows you to shift resources based on business needs rather than vendor constraints. This is one of the most reliable ways to avoid cloud vendor lock-in.

That said, multi-cloud success depends on careful design. The goal is not to duplicate environments but to optimize workloads for each platform while maintaining portability.
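Multi-cloud placement works best when the decision is driven by data rather than hard-coded. The sketch below picks the cloud with the lowest estimated cost for a job and adds a flat, hypothetical transfer charge when the input data lives elsewhere, so that placement does not ignore data gravity. All prices and runtimes are made-up inputs; real egress pricing is usually per gigabyte and varies by region.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CloudOption:
    cloud: str
    hourly_cost: float        # hypothetical cost per hour for the required cluster size
    est_runtime_hours: float  # estimated runtime on this cloud, e.g. from past runs
    data_is_local: bool       # True if the input data already lives in this cloud


def estimated_cost(option: CloudOption, transfer_cost: float) -> float:
    """Compute cost plus a flat charge when the data has to move between clouds."""
    cost = option.hourly_cost * option.est_runtime_hours
    if not option.data_is_local:
        cost += transfer_cost
    return cost


def place_workload(options: list[CloudOption], transfer_cost: float = 0.0) -> CloudOption:
    """Return the option with the lowest estimated total cost."""
    if not options:
        raise ValueError("no cloud options to choose from")
    return min(options, key=lambda option: estimated_cost(option, transfer_cost))


# Hypothetical figures for one nightly batch job.
options = [
    CloudOption("aws", hourly_cost=12.0, est_runtime_hours=2.0, data_is_local=True),
    CloudOption("azure", hourly_cost=10.0, est_runtime_hours=2.0, data_is_local=False),
    CloudOption("gcp", hourly_cost=9.0, est_runtime_hours=2.5, data_is_local=False),
]
print(place_workload(options).cloud)                     # azure
print(place_workload(options, transfer_cost=5.0).cloud)  # aws
```

With no transfer charge, the cheaper compute on Azure wins; once moving the data costs 5 units, keeping the job next to its data on AWS becomes cheaper. Portability matters here because the choice can only change if the job can actually run on each cloud.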

Lack of visibility into usage and costs is one of the biggest drivers of lock-in. Consumption-based pricing can escalate quickly without clear monitoring, creating hidden dependencies that slow down future migrations.

Transparent metrics help you manage workloads, optimize spending, and make informed decisions when evaluating alternative platforms or cloud cost optimization solutions.
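Visibility can start with something as simple as each platform's share of total spend. This sketch aggregates hypothetical usage records and flags any platform above a concentration threshold. The 70% threshold and the record fields are assumptions, and grouping by `workload` instead of `platform` works the same way.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class UsageRecord(NamedTuple):
    platform: str
    workload: str
    cost: float  # billed cost for the period, in a single currency


def spend_share_by_platform(records: Iterable[UsageRecord]) -> dict[str, float]:
    """Return each platform's share of total spend, largest first."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[record.platform] += record.cost
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return {platform: cost / grand_total for platform, cost in ranked}


def concentration_alerts(shares: dict[str, float], threshold: float = 0.7) -> list[str]:
    """Flag platforms whose share of spend meets or exceeds the threshold."""
    return [f"{platform} accounts for {share:.0%} of spend"
            for platform, share in shares.items() if share >= threshold]


# Hypothetical monthly usage across three platforms.
records = [
    UsageRecord("platform_a", "etl", 700.0),
    UsageRecord("platform_a", "ml_training", 150.0),
    UsageRecord("platform_b", "bi_dashboards", 100.0),
    UsageRecord("platform_c", "ad_hoc", 50.0),
]
shares = spend_share_by_platform(records)
for platform, share in shares.items():
    print(f"{platform}: {share:.0%}")
print(concentration_alerts(shares))
```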

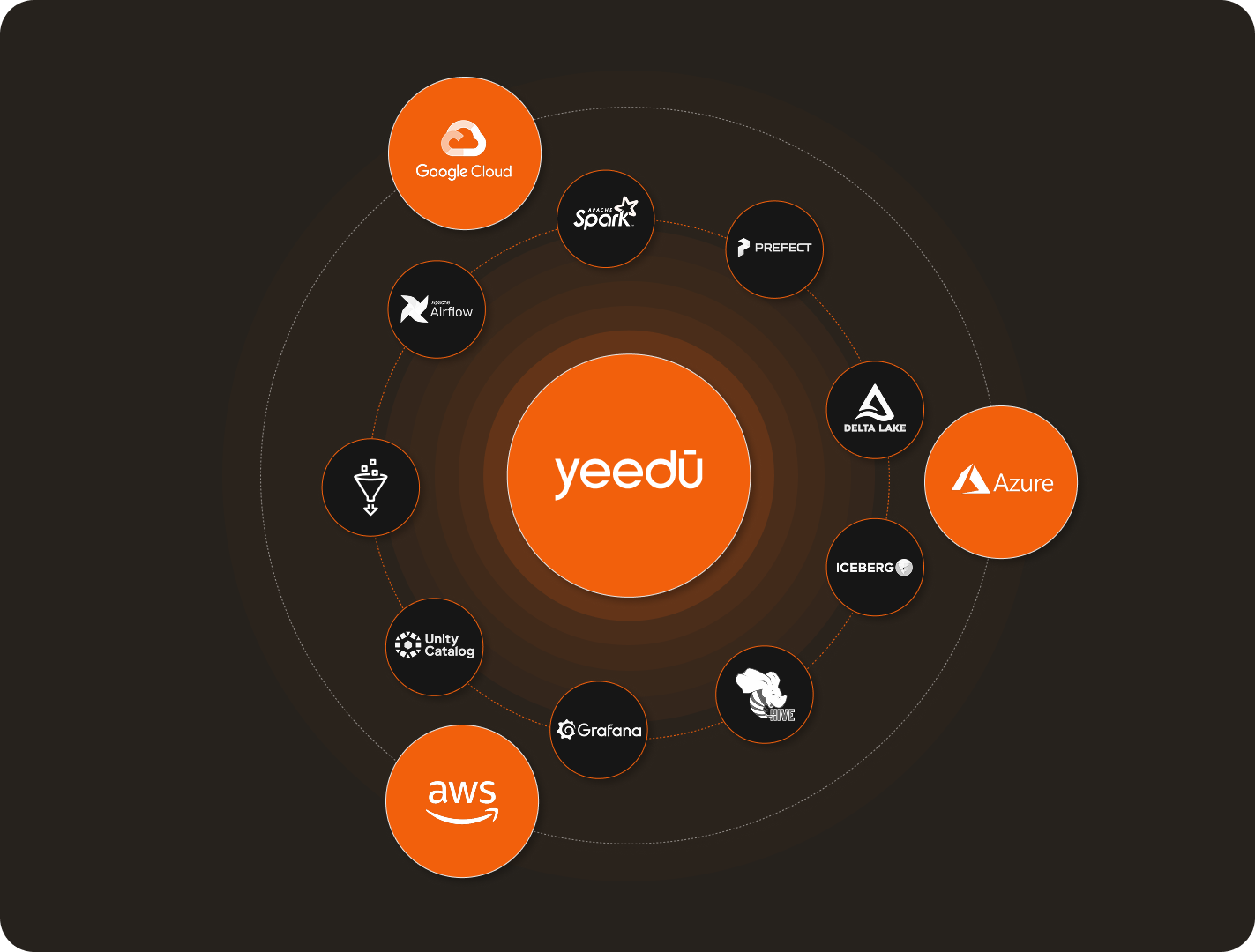

Yeedu is built around the principle of vendor independence:

Based on Apache Spark, Yeedu ensures compatibility with the wider data ecosystem and avoids proprietary compute constraints.

Integrates with open-source orchestrators like Apache Airflow and Prefect, allowing you to keep your preferred workflow tools.

Provides built-in observability through Grafana for transparent workload monitoring and optimization.

Supports both Delta Lake and Apache Iceberg, letting you choose the format that fits your needs.

Works seamlessly with Unity Catalog, Hive Metastore, or AWS Glue Data Catalog, preserving existing catalog infrastructure.

Yeedu supports all major clouds (AWS, Azure, and Google Cloud) from a single control plane. Users can set up clusters and run jobs in any of these environments, making it easier to avoid vendor lock-in across cloud providers.

Yeedu supports Databricks features such as magic commands, dbutils, and Unity Catalog, and offers automated migration tools that simplify moving hundreds of jobs.

Avoiding vendor lock-in requires intentional architectural decisions and continuous awareness. The most forward-looking data teams treat vendor independence as a design principle, not a reaction to problems.

Modern platforms that embrace open-source technologies while providing enterprise-grade performance and predictable pricing represent the next phase of data architecture. They enable organizations to retain governance and infrastructure investments while gaining the agility to optimize cost and performance.

The real question isn’t whether you should prioritize vendor independence; it’s whether you can afford not to. In an era of rising data processing costs and rapidly evolving platforms, flexibility is no longer optional. It’s a competitive advantage and a cornerstone of sound engineering strategy.